AIX Adoption Guide

The "Single Source of Truth" governance framework for AI UI patterns across the ecosystem.

With nearly two decades of experience, I bridge strategy and creativity to drive digital transformation. I lead design teams in crafting AI-driven solutions that optimize IT operations and enhance user experiences for the enterprise.


Orchestrating multi-agent workflows to automate complex HR life-events like parental leave.

Replacing SQL with Natural Language to democratize data analytics for business users.

With nearly two decades of experience, I am an AI strategist and design leader specializing in digital transformation and creative visual communication. I currently spearhead an AI initiative that harnesses advanced data analytics and generative tools to enhance user experience and service design across upstream IT operations.

My background is deeply rooted in solving complex, enterprise-scale problems. At Enbridge, I worked on the "Emma" project, optimizing help desk workflows and network infrastructure for the oil and gas sector. By merging deep upstream domain knowledge with modern IT operations, I bridge the gap between high-level strategy and pixel-perfect creativity.

I am proficient in the Adobe Suite, Figma, and data analytics, using these tools not just to design screens, but to craft impactful, innovative solutions that drive business success. I am committed to thoughtful, AI-driven design and effective leadership that empowers my team to do their best work.

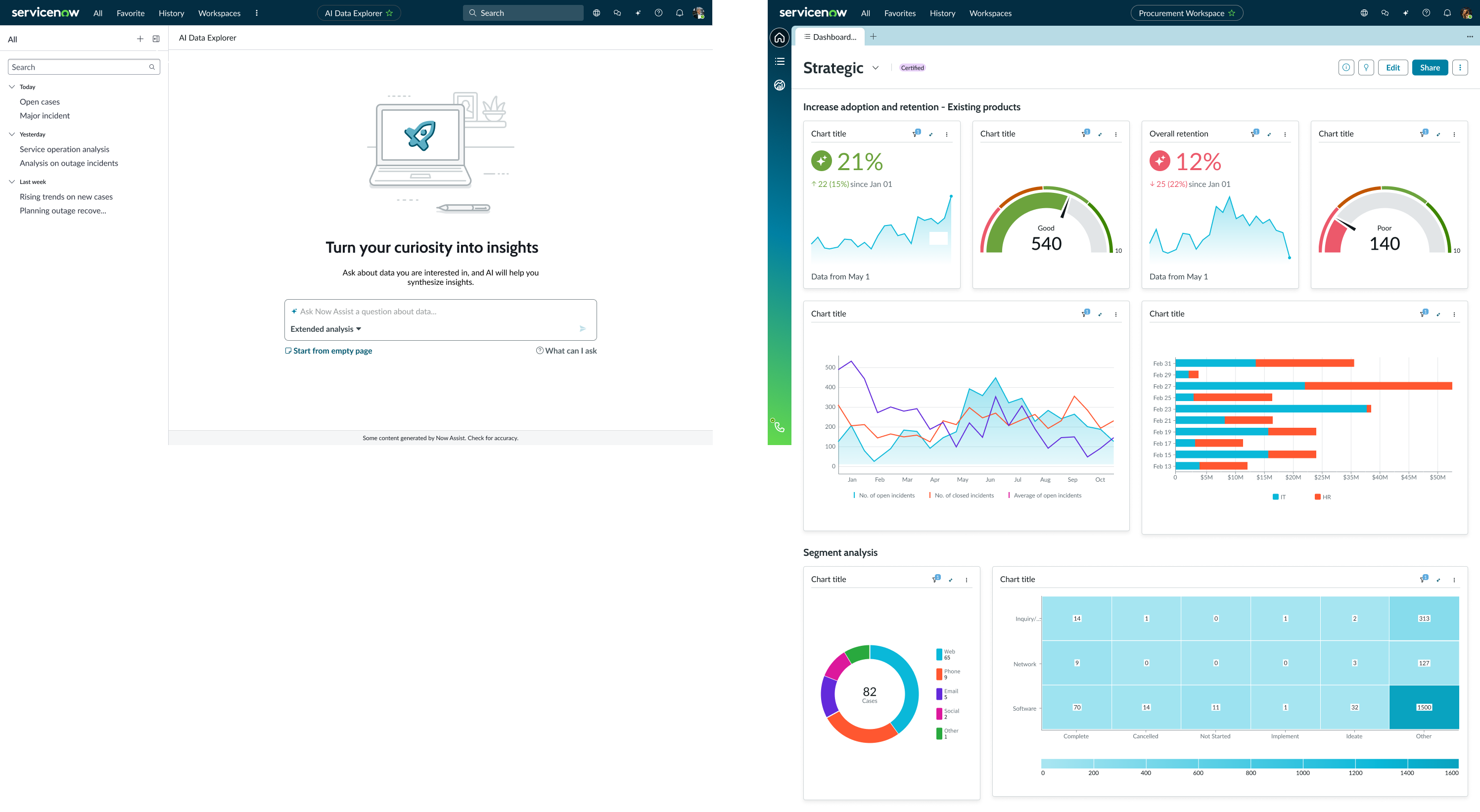

A governance framework for scaling standardized AI experiences across the enterprise.

Lead Product Designer

4 Months (Q2-Q3)

Design Systems (2 Product Designers, 4 Engineers)

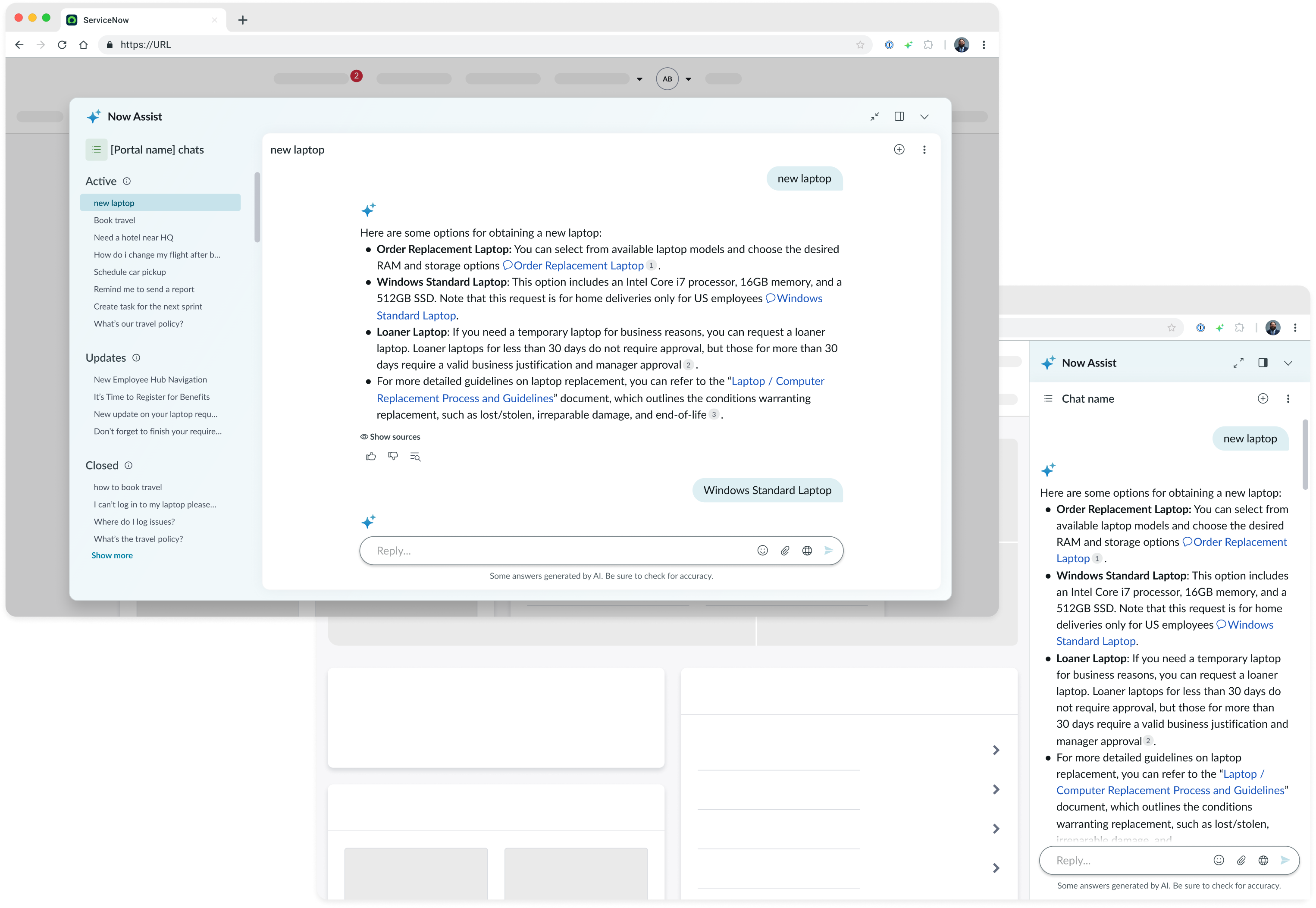

As ServiceNow rapidly integrated Generative AI into its vast ecosystem, individual product teams were building their own "chat windows" in silos. This resulted in a disjointed experience where an AI assistant in the HR portal behaved completely differently from one in the IT agent workspace.

Our audit revealed that 15+ variations of the same "Chat" component existed in the codebase. Some opened as sidebars, others as floating modals. This lack of consistency created significant cognitive load for our users.

Before: Inconsistent side panels and chat layouts that confused users.

Engineers were maintaining 12+ variations of a simple chat input component.

Design called it "Modal," Dev called it "Popover," PM called it "Assistant."

Legacy patterns couldn't support new multi-turn agentic workflows.

I defined a strategy based on decoupling Anatomy from Capabilities.

1. Anatomy (Rigid): The "Shell." Window controls, history retention, input mechanisms. These are immutable to ensure the user always knows how to operate the AI.

2. Capabilities (Flexible): The "Skill." Conversational catalogs, data visualization cards, and interactive forms.
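Here is a minimal Python sketch of the Anatomy/Capabilities split, assuming a simple component model. `Shell`, `AssistantSurface`, the control names and the `"chart"` skill are hypothetical illustrations, not the actual AIX component API. The shell is a frozen dataclass, so it cannot be altered at runtime. Capabilities are registered as interchangeable renderers.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

# Hypothetical sketch: the "Shell" (anatomy) is frozen, so product teams
# cannot change window controls, history retention, or input mechanisms.
@dataclass(frozen=True)
class Shell:
    window_controls: Tuple[str, ...] = ("minimize", "pin", "close")
    retains_history: bool = True
    input_modes: Tuple[str, ...] = ("text", "attachment", "voice")

# A capability ("skill") renders one kind of response payload.
CapabilityRenderer = Callable[[dict], str]

@dataclass
class AssistantSurface:
    shell: Shell
    capabilities: Dict[str, CapabilityRenderer] = field(default_factory=dict)

    def register(self, kind: str, renderer: CapabilityRenderer) -> None:
        """Teams extend the surface by adding skills, never by editing the shell."""
        self.capabilities[kind] = renderer

    def render(self, message: dict) -> str:
        # Fall back to plain text when no registered capability handles this kind.
        renderer = self.capabilities.get(message.get("kind", "text"))
        return renderer(message) if renderer else message.get("text", "")

surface = AssistantSurface(shell=Shell())
surface.register("chart", lambda m: f"[chart: {m['title']}]")
print(surface.render({"kind": "chart", "title": "P1 incidents"}))  # [chart: P1 incidents]
```

The shell stays immutable while the capability registry stays open. This mirrors the rigid/flexible split: every team gets the same controls, and only the content inside the stream varies.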

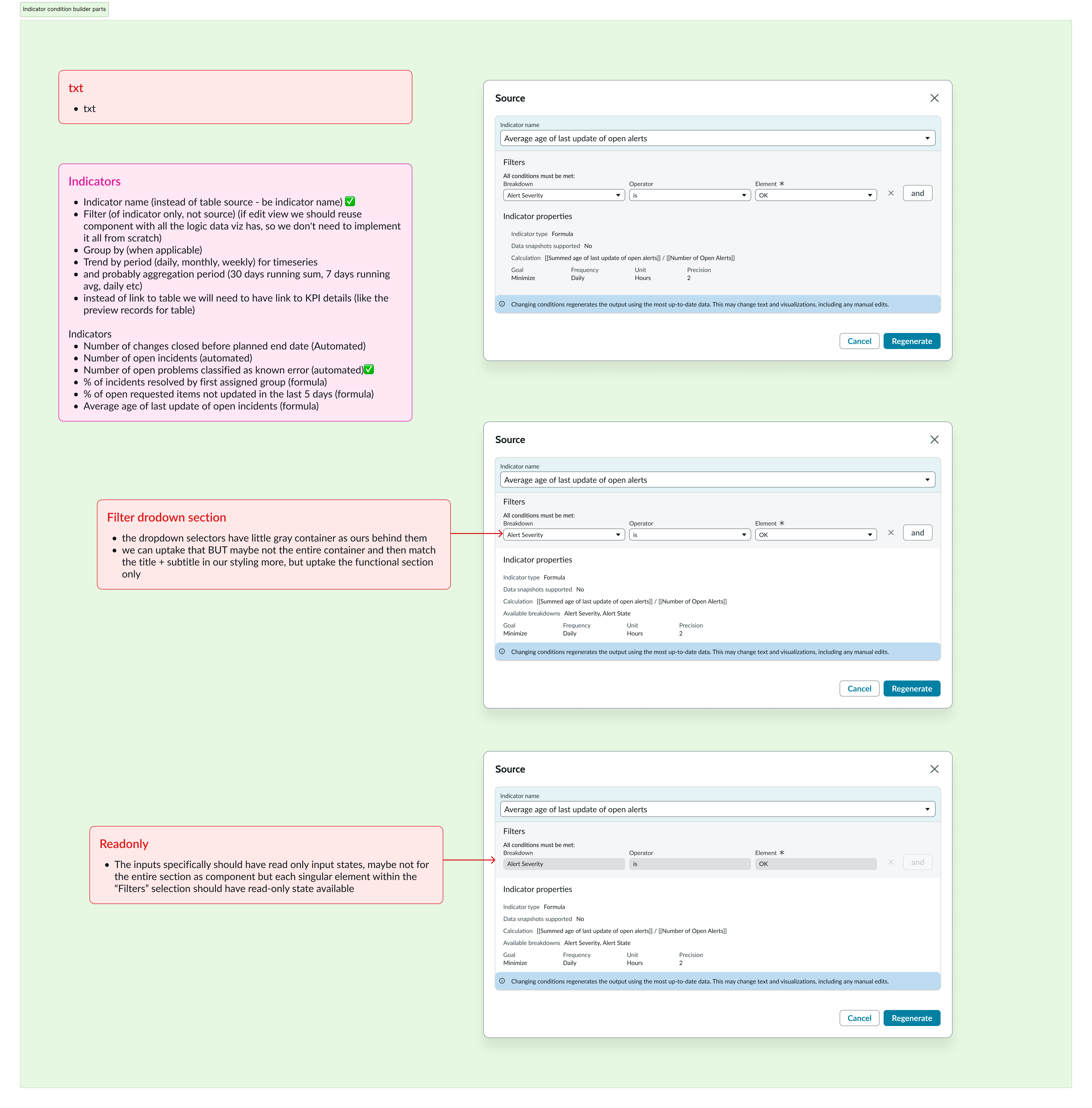

Standardizing the input field structure for consistency.

Defining how files and context are added to the conversation.

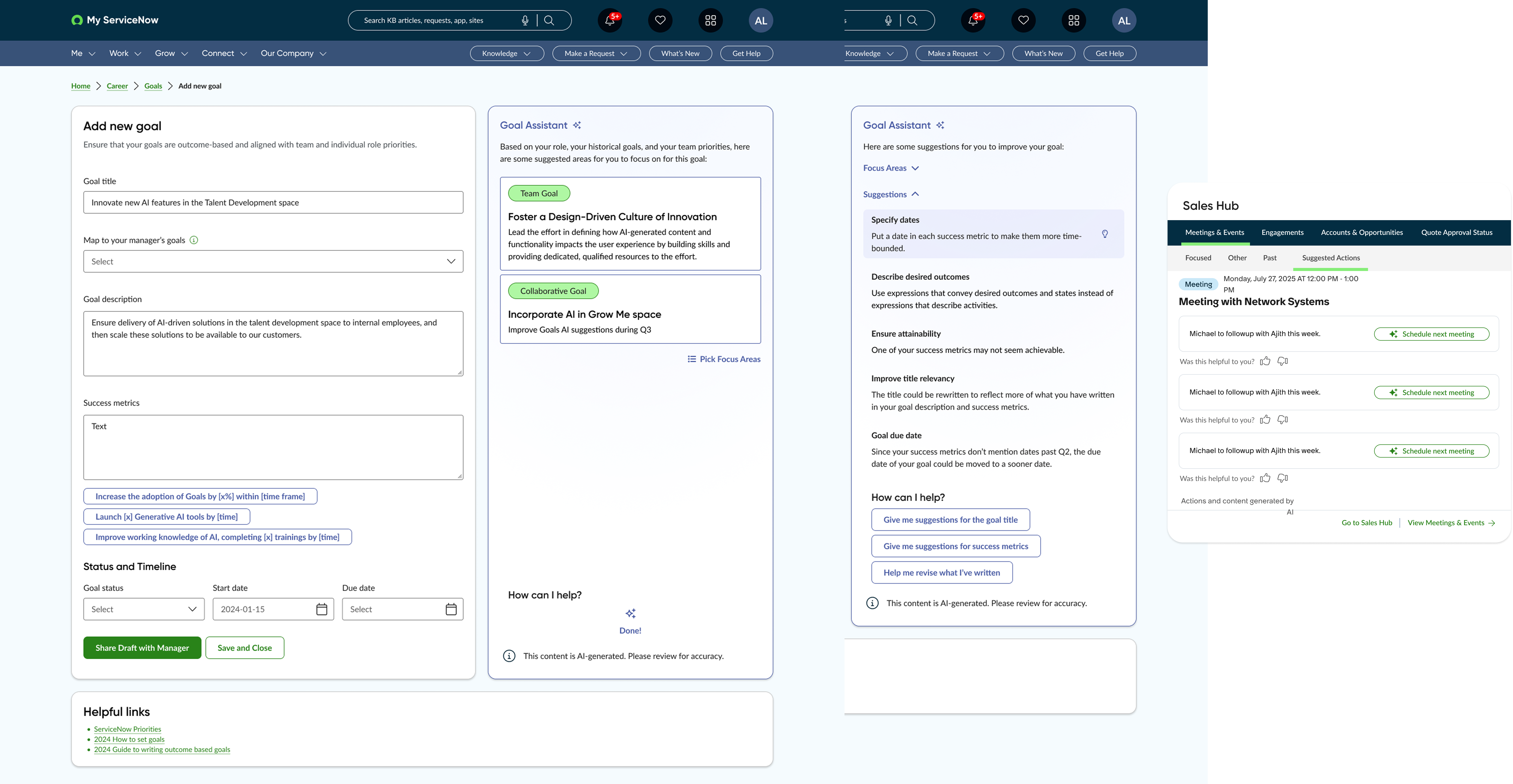

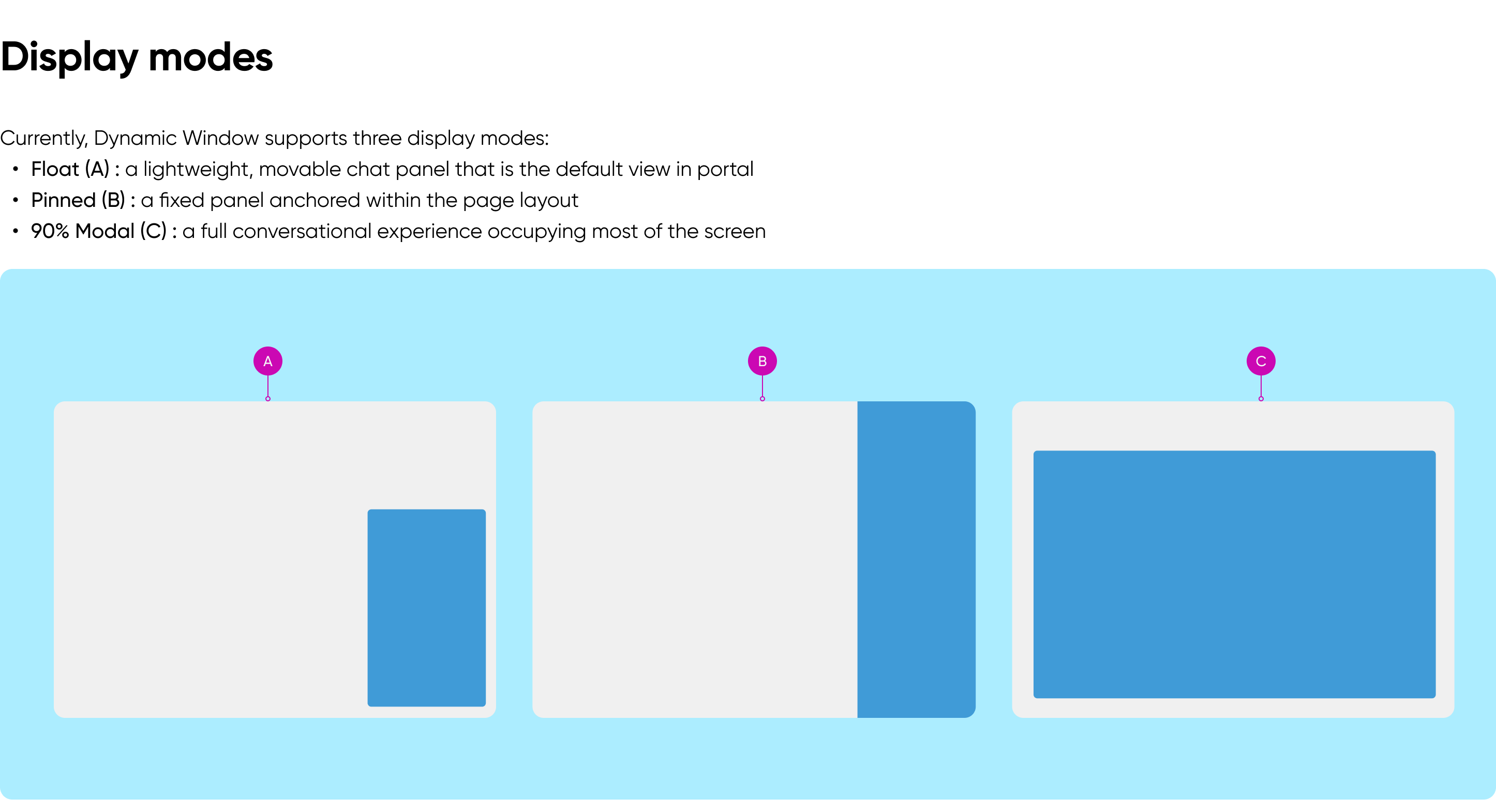

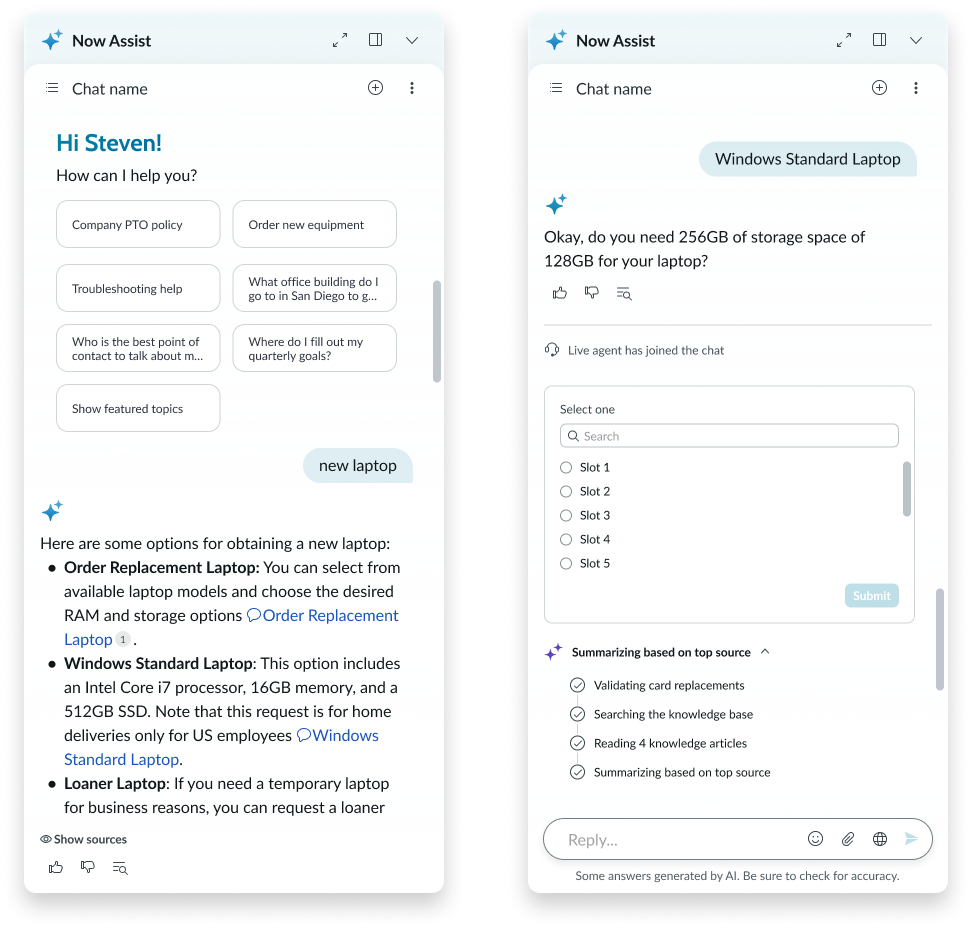

We needed the AI to adapt to the user's context. I designed a responsive system that supports three distinct modes: Float (for quick Q&A), Pinned (for side-by-side work), and Modal (for immersive, complex tasks).

Defining the 3 core interaction modes: Float, Pinned, and Modal.

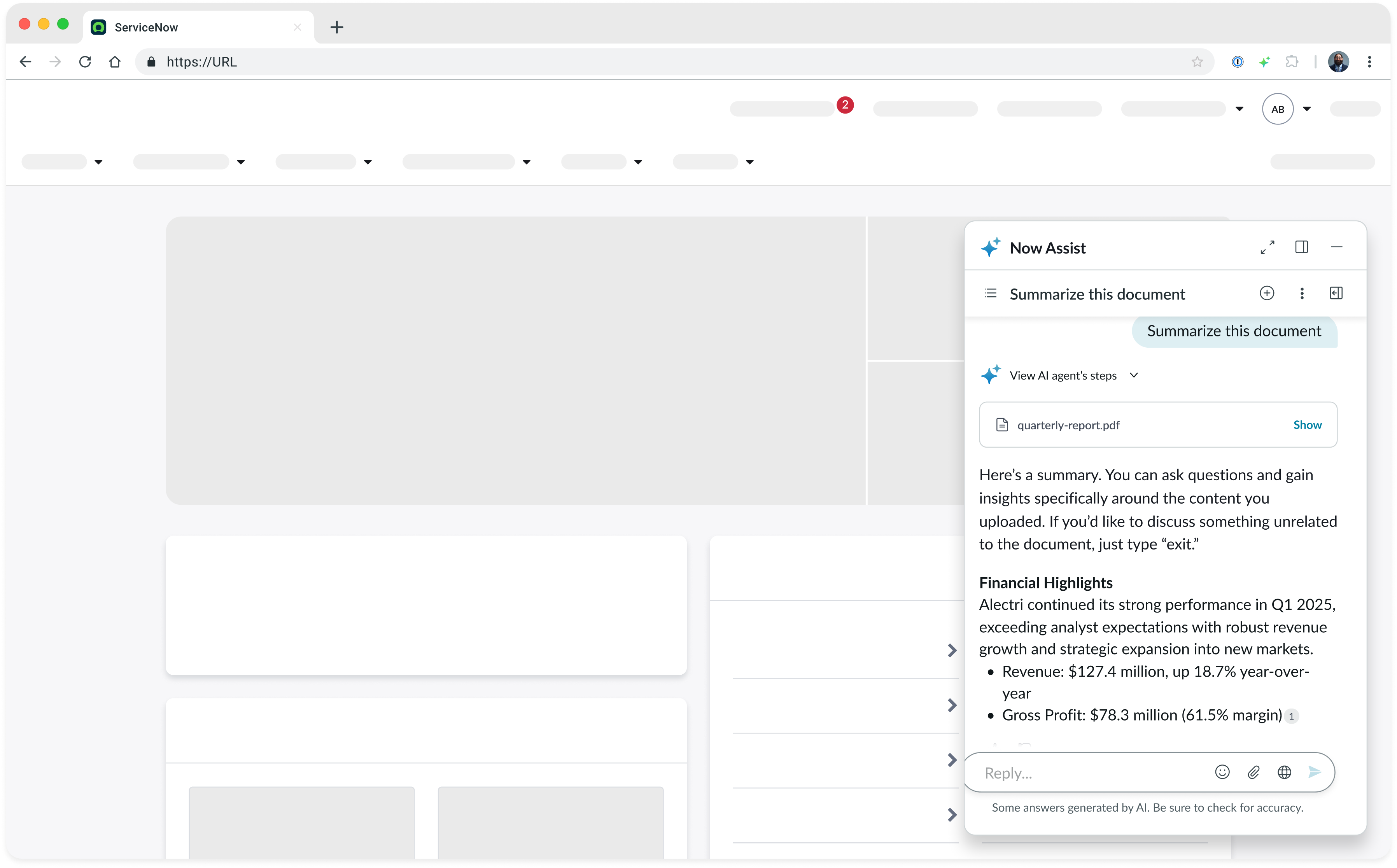

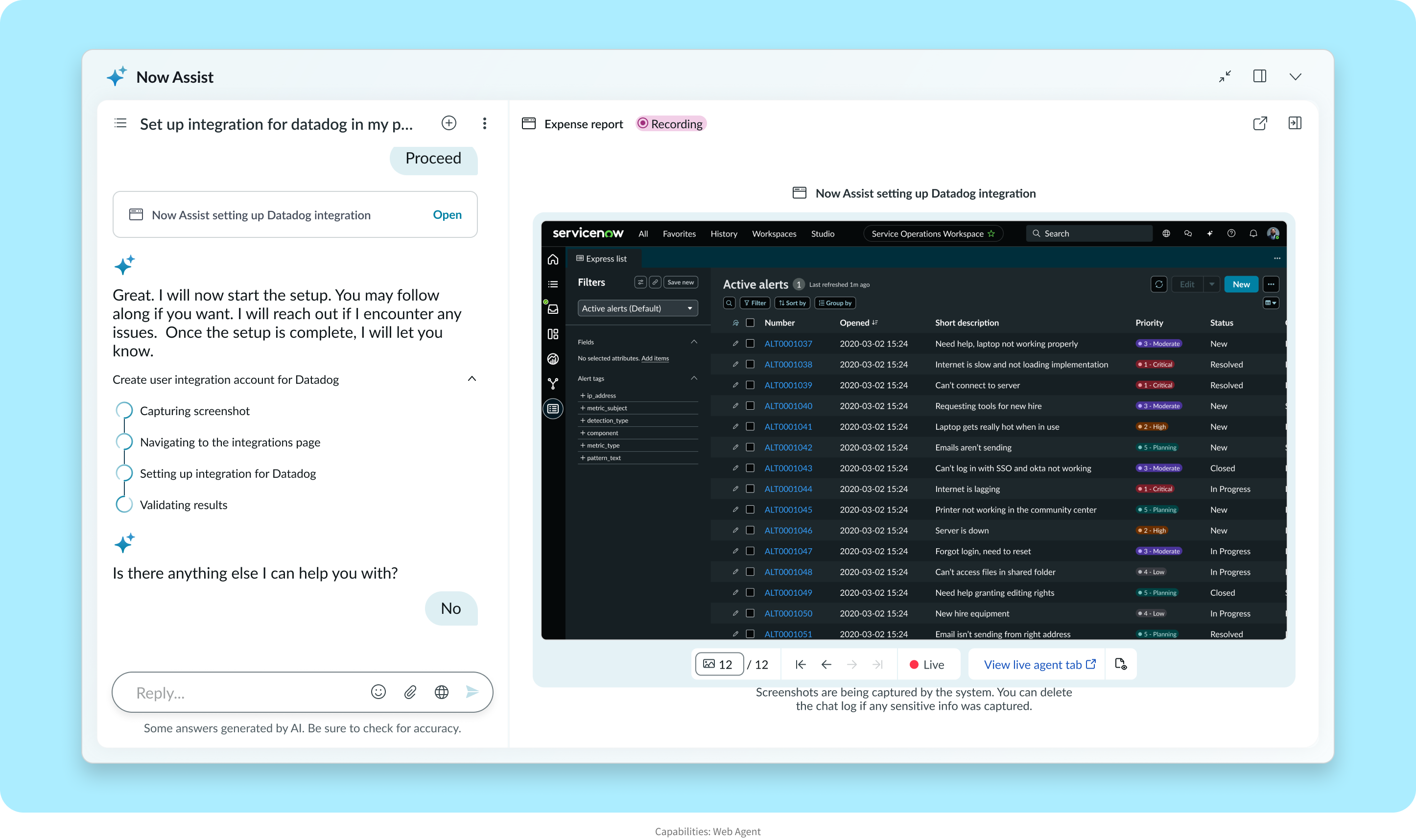

Beyond simple text, we introduced "Interactive Views." This allows the AI to render a mini-application (like a Setup Wizard or a Data Dashboard) directly within the chat stream, keeping the user in flow.

AI guiding the user through a complex integration setup.

Rendering full dashboards within the conversational modal.

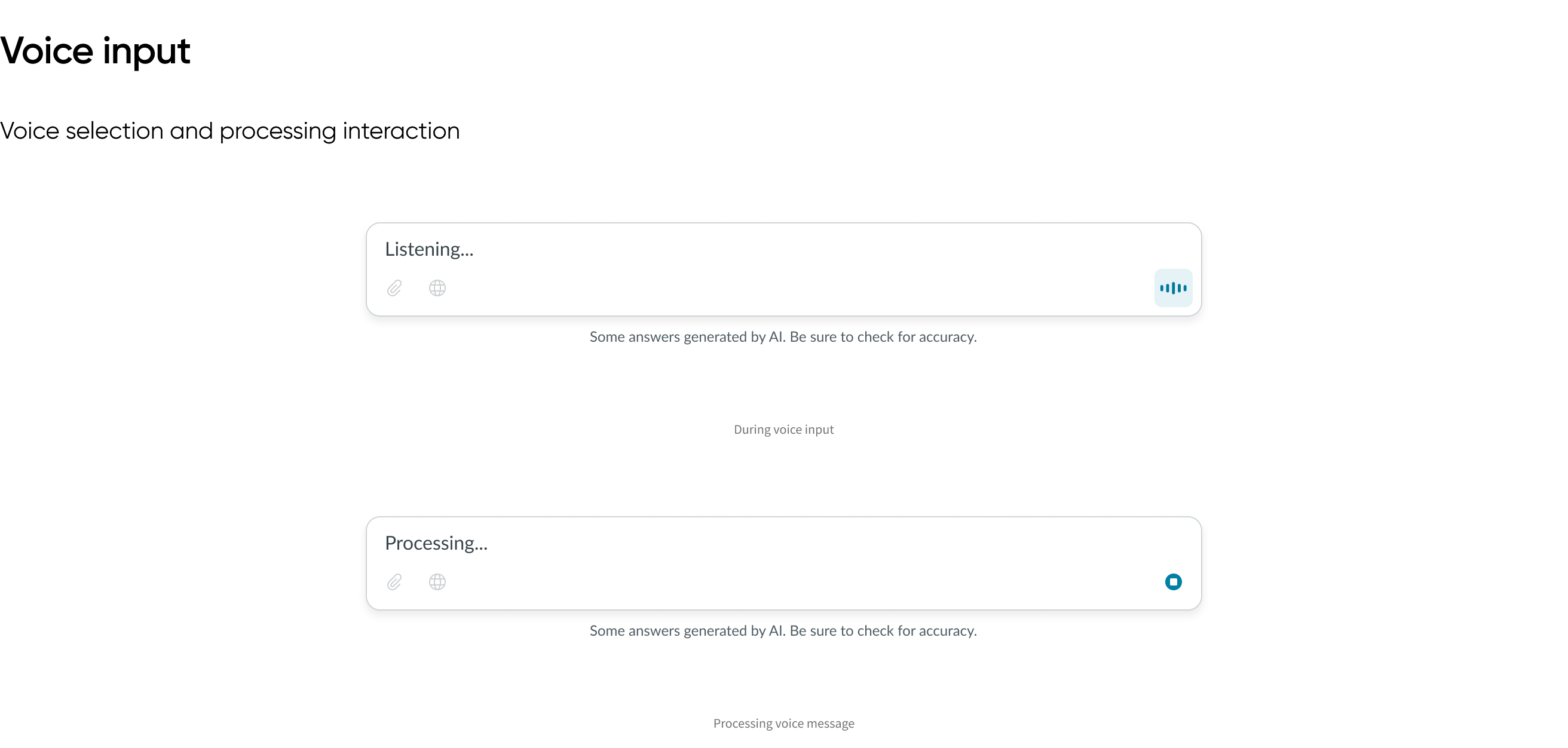

We also standardized advanced inputs like Voice, ensuring accessibility and ease of use for mobile workers.

The AIX Adoption Guide launched in Q3 and became the mandatory standard for all new AI features. By providing pre-built, accessibility-tested components, we reduced the design-to-dev handoff time by approximately 40%.

Rapidly defining the visual soul of "Now Assist" to beat the clock.

Design Lead

2 Weeks (Sprint)

"Tiger Team" (3 Designers)

With the deadline for a major conference (Knowledge) looming, ServiceNow's AI features risked looking like a "Frankenstein" product. Multiple teams were building AI features in parallel with no visual alignment: one team was using green sparkles, another blue bolts. This lack of cohesion threatened to dilute the brand impact of the launch and to confuse users about which features were actually AI-powered.

We audited the current state and found that AI inputs were indistinguishable from standard search bars. Users didn't know they could "talk" to the system. We needed a way to signal "magic" without breaking the utilitarian aesthetic of the enterprise platform.

I proposed a "Design Spike"—a concentrated, time-boxed effort to solve this singular problem. We formed a small squad of 3 senior designers and gave ourselves 2 weeks to define the visual language.

Our criteria for success were:

1. Distinctiveness: It must not look like a success message (green) or a link (blue).

2. Accessibility: It must meet contrast-ratio requirements in both light and dark modes.

3. Scalability: It must work both as a 16px icon and as a full-page hero background.

We explored over 20 colorways and motion studies. We landed on "Coral"—a vibrant orange-pink gradient. It was warm, human, and fundamentally different from the cool, clinical blues of the main UI.

We built a "Kit of Parts" including:

• The Sparkle Icon: The universal signifier for AI.

• Shimmer Loaders: A gradient animation that implies "thinking" rather than just "loading."

• Response Containers: A subtle border treatment to differentiate AI-generated content from human-written notes.

The impact was immediate. We distributed the library via Figma, and within 48 hours, 5 different engineering teams had updated their UIs. The consistent branding was a key talking point in the keynote presentation, creating a unified "Now Assist" identity that looked intentional and polished.

Democratizing AI creation with a No-Code "Wizard" for System Administrators.

Lead Product Designer

3 Months

Admin Experience Unit

ServiceNow is a powerful platform, but it has a notoriously steep learning curve. Historically, creating a "Virtual Agent" required knowing proprietary scripting languages and navigating a complex flow chart interface.

Our research identified a key persona: the "Accidental Admin"—often an IT generalist who needs to set up a simple Q&A bot (e.g., "What is the Wi-Fi password?") but gets blocked by the technical complexity of our legacy tools. They were spending weeks configuring basic logic that should have taken minutes.

We defined the vision for "Admin Studio"—a centralized hub for AI creation. The core philosophy was "Progressive Disclosure." We would show the simple, happy-path options first, and tuck the advanced configuration (temperature settings, API hooks) behind an "Advanced" toggle.

I designed a "Guided Setup" wizard. Instead of dropping the user onto a blank canvas (which causes "blank page paralysis"), the system asks 4 simple questions:

1. Who is this agent for? (e.g., Employees in London)

2. What triggers it? (e.g., Questions about VPN)

3. What should it do? (e.g., Search the Knowledge Base)

4. Where should it live? (e.g., Slack and Web Portal)

Based on these inputs, the system auto-generates the underlying flow logic. I built high-fidelity prototypes demonstrating this "text-to-app" flow, which clarified the requirements for the engineering team and proved that a no-code solution was feasible.
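The four answers could map to flow logic roughly as in the sketch below. The step names and dictionary schema are assumptions made for illustration. The real generated flow format is not described here.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class SetupAnswers:
    audience: str        # 1. Who is this agent for?
    trigger: str         # 2. What triggers it?
    action: str          # 3. What should it do?
    channels: List[str]  # 4. Where should it live?

def generate_flow(answers: SetupAnswers) -> List[Dict[str, str]]:
    """Compile the four wizard answers into ordered flow steps (hypothetical step schema)."""
    steps = [
        {"step": "audience_filter", "value": answers.audience},
        {"step": "intent_trigger", "value": answers.trigger},
        {"step": "action", "value": answers.action},
    ]
    # One publish step per channel the agent should live in.
    steps += [{"step": "publish", "value": channel} for channel in answers.channels]
    return steps

flow = generate_flow(SetupAnswers(
    audience="Employees in London",
    trigger="Questions about VPN",
    action="Search the Knowledge Base",
    channels=["Slack", "Web Portal"],
))
for step in flow:
    print(step["step"], "->", step["value"])
```

The admin only ever sees the four questions. The ordered step list is the kind of artifact the "text-to-app" layer would hand to the existing flow engine.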

These prototypes were used to secure executive buy-in for the Q4 roadmap. We shifted the engineering strategy from "building more flow features" to "building a simplified layer on top of flow." This pivot is expected to reduce the Time-to-Deployment for a standard agent from 2 weeks to 30 minutes.

Replacing SQL queries with Natural Language to democratize data analytics.

Lead Designer

TBD

Analytics Unit

ServiceNow holds vast amounts of enterprise data, but accessing it required using complex "Report Builders" that demanded knowledge of database schemas, table names, and filtering logic.

Our research showed that Business Analysts often knew what they wanted (e.g., "Show me P1 incidents in London last week") but failed to build the report because they couldn't find the right table or filter operator. They were dependent on data scientists, creating a bottleneck that delayed decision-making by days or weeks.

We focused on "Natural Language Querying" (NLQ). The key challenge wasn't just translating text to SQL; it was handling ambiguity. If a user says "Show me high priority tickets," do they mean Priority 1, or Priority 1 and 2? We defined a "Refinement Loop" strategy where the AI asks clarifying questions rather than guessing.
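Here is a minimal sketch of the Refinement Loop, assuming a small hand-written lexicon of ambiguous terms. `AMBIGUOUS_TERMS`, `interpret`, and the `incident` table and `priority` column names are hypothetical. A production NLQ system would rely on a language model and the real schema rather than substring matching.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple, Union

# Hypothetical lexicon: ambiguous phrases mapped to candidate interpretations.
AMBIGUOUS_TERMS = {
    "high priority": [["1"], ["1", "2"]],
}

@dataclass
class Clarification:
    question: str
    options: List[List[str]]

@dataclass
class Query:
    priorities: List[str]

    def to_sql(self) -> Tuple[str, List[str]]:
        """Parameterized SQL; the `incident` table and `priority` column are assumptions."""
        if not self.priorities:
            return "SELECT * FROM incident", []
        placeholders = ", ".join("?" for _ in self.priorities)
        return f"SELECT * FROM incident WHERE priority IN ({placeholders})", list(self.priorities)

def interpret(utterance: str, choice: Optional[int] = None) -> Union[Clarification, Query]:
    """Refinement loop: ask a clarifying question instead of guessing."""
    text = utterance.lower()
    for term, options in AMBIGUOUS_TERMS.items():
        if term in text:
            if choice is None:
                labels = [" and ".join(f"Priority {p}" for p in opt) for opt in options]
                return Clarification(f'By "{term}", do you mean {" or ".join(labels)}?', options)
            return Query(priorities=options[choice])
    return Query(priorities=[])  # no ambiguous priority term found

first = interpret("Show me high priority tickets")
print(first.question)
# By "high priority", do you mean Priority 1 or Priority 1 and Priority 2?
print(interpret("Show me high priority tickets", choice=1).to_sql())
```

The function returns a `Clarification` object instead of a best guess, which is the point of the pattern: the SQL is built only after the user has resolved the ambiguity.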

Transforming fragmented HR processes into a seamless, orchestrated conversation.

Lead Product Designer

6 Months (End-to-End)

HRSD Unit (3 Devs, 1 PM)

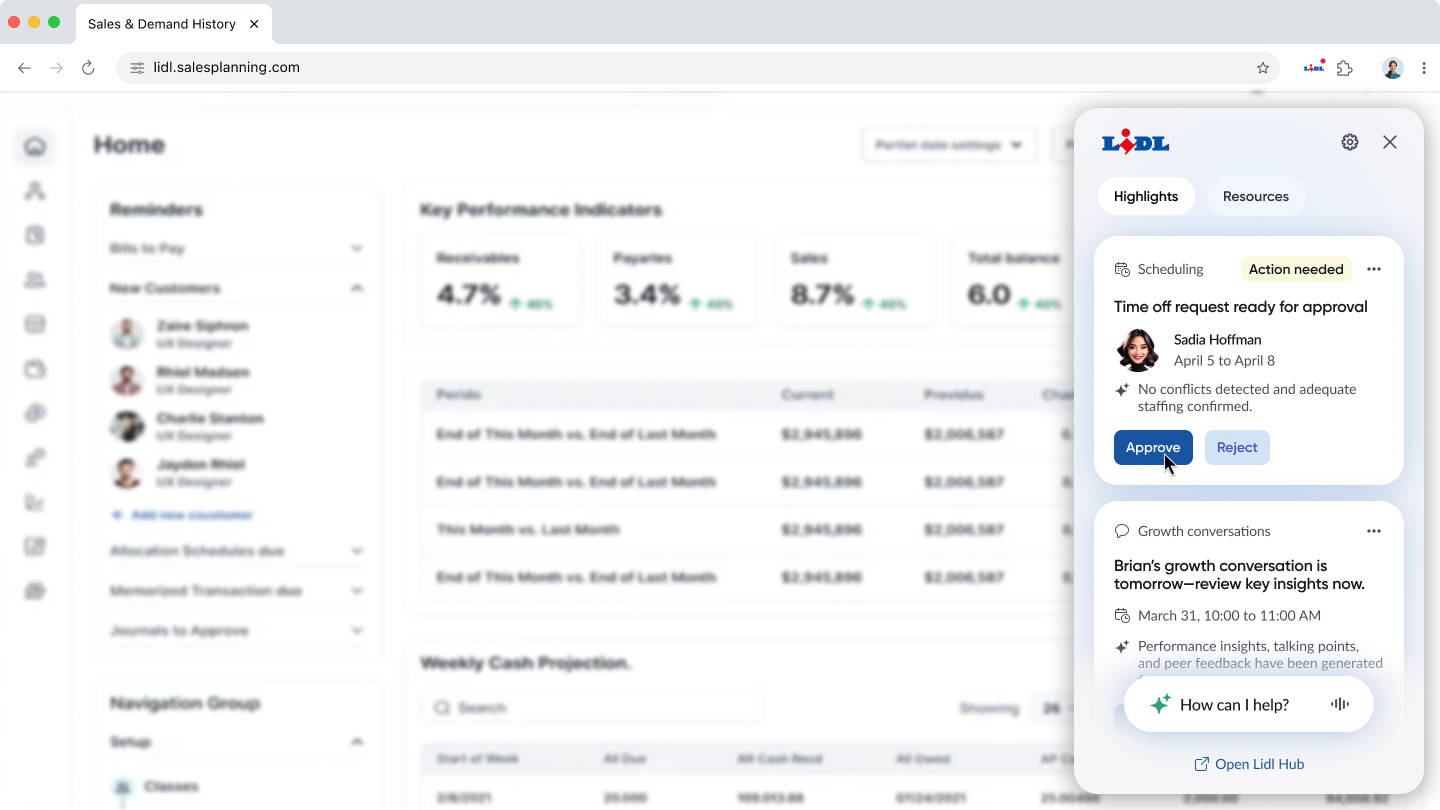

In the modern enterprise, simple life events trigger complex administrative burdens. Our research revealed that an employee going on parental leave touches an average of 4 distinct systems: the Time-Off portal to log dates, the Benefits portal to add dependents, the Payroll system to adjust tax withholding, and often a separate IT portal to return equipment.

This fragmentation creates high cognitive load during an already stressful life transition. Employees were "swivel-chairing" between tabs and often missed critical steps, which resulted in pay discrepancies or lapsed insurance coverage. HR Service Centers were overwhelmed with Tier-1 tickets that simply guided users on where to click.

Users lose context when jumping between disparate legacy systems.

Manual coordination leads to missed steps and compliance risks.

Simple requests took days due to back-and-forth ticketing.

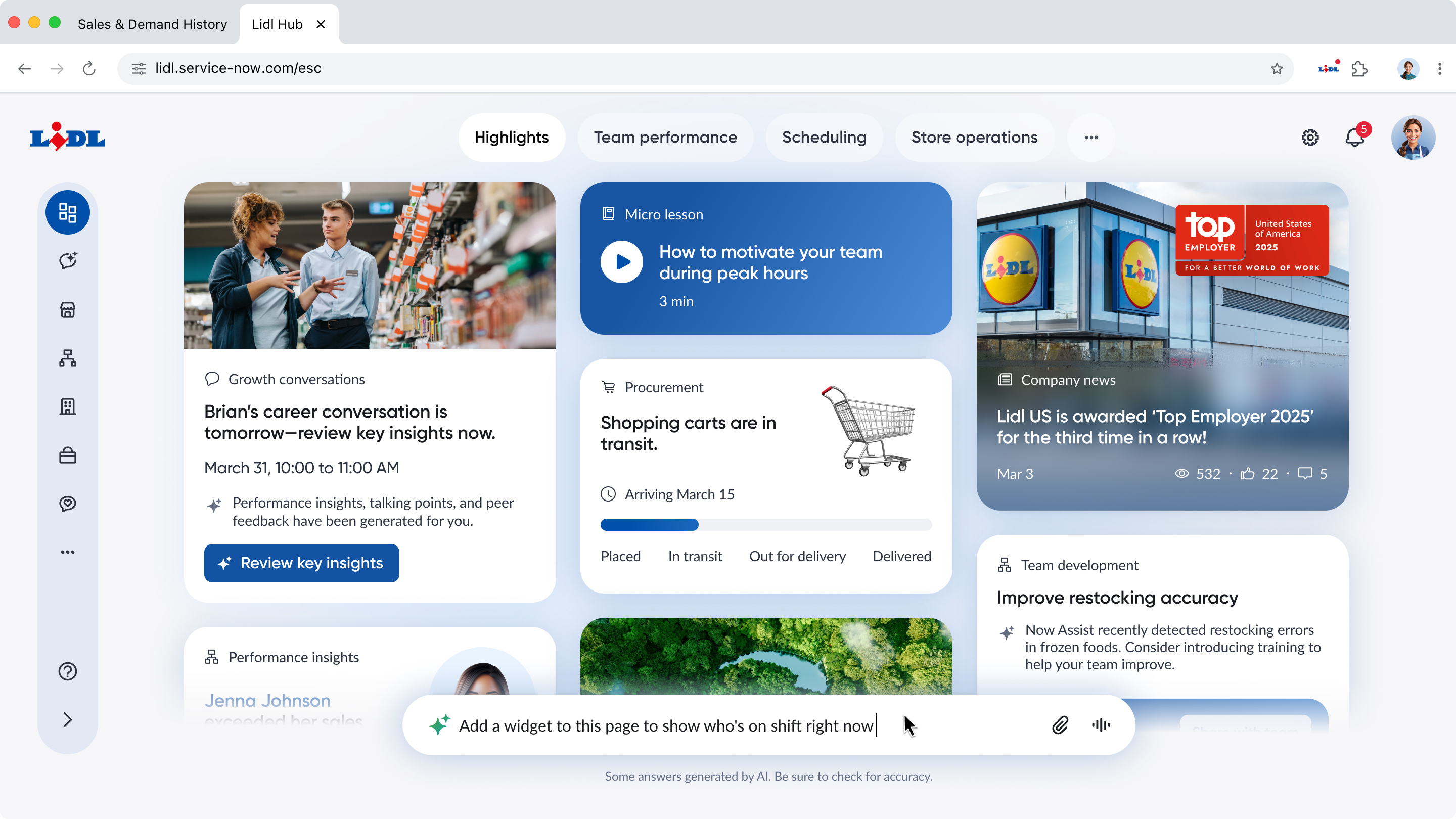

The Current State: Information is so dense that the user has to hunt for the right module.

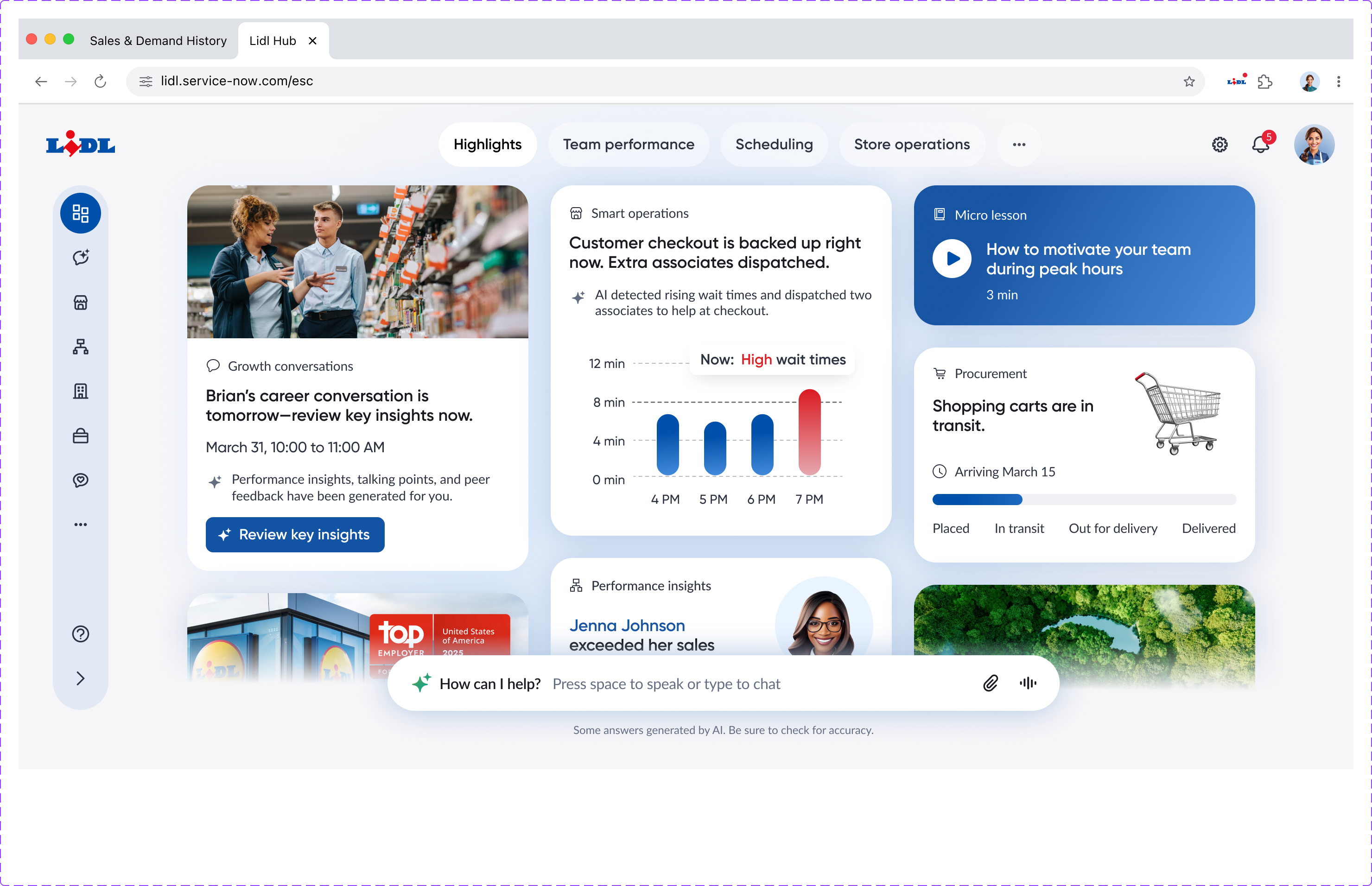

We moved away from the concept of a "Chatbot" (which typically just retrieves articles) to an "Agentic Orchestrator." The core strategy was to build a "General Contractor" agent that sits at the top level. It doesn't know the specifics of tax law, but it knows which sub-agent does.

When a user expresses a complex intent like "I'm having a baby," the Orchestrator breaks this down into a dependency tree and spins up the specialized sub-agents (Leave Agent, Benefits Agent, IT Agent) to execute the tasks in the correct order, shielding the user from the backend complexity.
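The dependency-tree idea can be sketched with Python's standard-library `graphlib.TopologicalSorter`. The intent name, task names and dependencies below are assumptions for illustration. The source does not specify how the Orchestrator represents its plans.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

# Hypothetical dependency tree for one intent: each task lists the tasks it must wait for.
# Task names use the form "sub_agent.action".
INTENT_PLANS: Dict[str, Dict[str, Set[str]]] = {
    "parental_leave": {
        "leave.submit_request": set(),
        "benefits.add_dependent": {"leave.submit_request"},
        "it.set_ooo_reply": {"leave.submit_request"},
    },
}

def orchestrate(intent: str) -> List[Tuple[str, str]]:
    """Return (sub_agent, action) pairs in an order that respects every dependency."""
    plan = []
    for task in TopologicalSorter(INTENT_PLANS[intent]).static_order():
        agent, _, action = task.partition(".")
        plan.append((agent, action))
    return plan

for agent, action in orchestrate("parental_leave"):
    print(f"dispatch to {agent} agent: {action}")
```

`static_order()` yields one valid sequence. `TopologicalSorter` also offers `prepare()`, `get_ready()` and `done()`. An orchestrator could use these to dispatch independent sub-agents (here, Benefits and IT) in parallel once the leave request is in.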

The primary design challenge was Trust & Transparency. If an AI is acting on your behalf (for example, changing your payroll), you need to feel fully in control. I developed a "Plan & Execute" UI pattern.

Instead of the agent simply saying "Done," it presents a Summary Card: "I understand you want to go on leave. To do this, I will: 1. Submit request for May 1st, 2. Update benefits, 3. Set OOO reply. Do you want me to proceed?" This "Human-in-the-loop" confirmation step was critical for adoption.
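The Plan & Execute pattern reduces to one rule: render the plan, then run nothing until the user confirms. Below is a minimal sketch. `PlanStep`, `summary_card` and `execute_if_confirmed` are hypothetical names, and each step's action is stubbed as a callable.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class PlanStep:
    description: str
    run: Callable[[], None]  # stubbed side effect, e.g. a call to a sub-agent

def summary_card(intent: str, steps: List[PlanStep]) -> str:
    """Render the plan the user must approve before anything happens."""
    lines = [f"I understand you want to {intent}. To do this, I will:"]
    lines += [f"{i}. {step.description}" for i, step in enumerate(steps, start=1)]
    lines.append("Do you want me to proceed?")
    return "\n".join(lines)

def execute_if_confirmed(steps: List[PlanStep], confirmed: bool) -> int:
    """Human-in-the-loop gate: nothing runs without confirmation. Returns steps executed."""
    if not confirmed:
        return 0
    for step in steps:
        step.run()
    return len(steps)

log: List[str] = []
steps = [
    PlanStep("Submit request for May 1st", lambda: log.append("leave")),
    PlanStep("Update benefits", lambda: log.append("benefits")),
    PlanStep("Set OOO reply", lambda: log.append("ooo")),
]
print(summary_card("go on leave", steps))
```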

User speaks naturally; Orchestrator parses intent.

Sidebar agent handles approvals without leaving the hub.

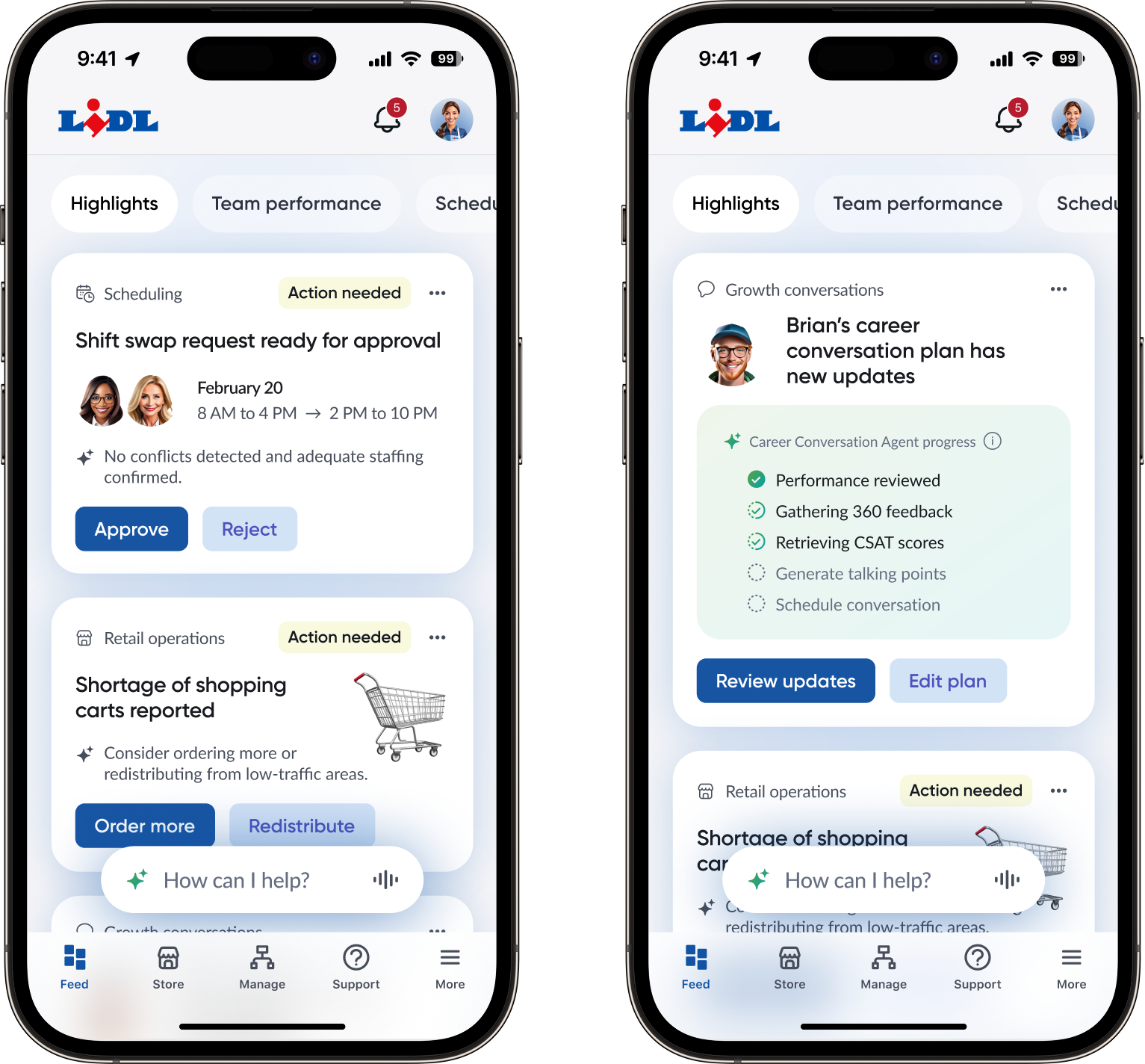

We went a step further with "Smart Operations." The system detects patterns (e.g., a sudden spike in checkout wait times or inventory depletion) and proactively prompts the manager with a solution, shifting the paradigm from "Request/Response" to "Sense/Respond."
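Sense/Respond can be illustrated with a simple outlier check. This sketch assumes a z-score threshold against recent history, which is a stand-in detection rule and not the method the system actually uses. `sense` and the prompt wording are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Optional

def sense(history: List[float], latest: float, metric: str, z_threshold: float = 3.0) -> Optional[str]:
    """Return a proactive prompt when `latest` is far above recent history, else None.

    The z-score rule is an assumed detection method, used here only for illustration.
    """
    if len(history) < 2:
        return None  # not enough history to establish a baseline
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        return None  # flat history: a z-score is undefined, so this sketch stays silent
    if (latest - baseline) / spread < z_threshold:
        return None
    return f"{metric} is at {latest:g}, well above the usual {baseline:g}. Review suggested actions?"

wait_minutes = [5, 6, 5, 6, 5, 6]
print(sense(wait_minutes, 6, "Checkout wait time"))  # None
print(sense(wait_minutes, 12, "Checkout wait time"))
```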

The Agentic HR Assistant has fundamentally changed how employees interact with enterprise systems. We effectively collapsed 4 different portals into 1 chat window.

Establishing trust through Explainability, Progressive Disclosure, and Ethical Design.

Principal Designer

Ongoing

Design Systems

Pattern Readout Deck

Our internal research on "Ethical AI" uncovered a disturbing trend: users were rejecting valid AI suggestions because they didn't understand the provenance of the data. In user testing, participants referred to the AI as a "black box" and hesitated to delegate high-stakes tasks (like approving a budget) without oversight.

Furthermore, we found significant discoverability issues. Fulfillers struggled to recognize AI-enabled features because every product team used a different icon: some used a robot, some a lightning bolt, some a brain. This cognitive friction meant valuable productivity tools were being ignored simply because users didn't know they existed.

"I don't know where this data came from."

Users missed AI features due to poor iconography.

Lack of "human-in-the-loop" controls.

We aligned our design strategy with the core principles of Ethical AI: Fairness, Explainability, and Oversight. We determined that every AI interaction must answer three questions for the user:

1. Why are you showing me this?

2. Where did you get this info?

3. How can I undo this?

I architected a library of 3 core patterns to solve these specific friction points:

The Problem: Popups break flow.

The Solution: Borrowing from coding IDEs, we implemented "Ghost Text" inside form fields. This is low-friction and non-blocking. Crucially, we standardized the interaction: Tab to accept, keep typing to ignore. This puts the user in the driver's seat, framing the AI as a copilot, not a commander.
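The Tab-to-accept, keep-typing-to-ignore interaction can be modeled as a small state machine. `GhostTextField` and its methods are hypothetical names for illustration, not a real form-field API.

```python
from dataclasses import dataclass

@dataclass
class GhostTextField:
    value: str = ""
    suggestion: str = ""  # AI completion rendered as greyed-out "ghost" text

    def ghost(self) -> str:
        """The part of the suggestion not yet typed ("" once it no longer matches)."""
        if self.suggestion.startswith(self.value):
            return self.suggestion[len(self.value):]
        return ""

    def on_key(self, key: str) -> None:
        if key == "Tab":
            # Tab accepts whatever ghost text is showing.
            self.value += self.ghost()
            self.suggestion = ""
        else:
            # Typing is never blocked; a keystroke that diverges from the suggestion dismisses it.
            self.value += key
            if not self.suggestion.startswith(self.value):
                self.suggestion = ""

accepted = GhostTextField(value="Reset", suggestion="Reset VPN password")
accepted.on_key("Tab")
print(accepted.value)  # Reset VPN password

ignored = GhostTextField(value="Reset", suggestion="Reset VPN password")
for ch in " Wi-Fi":
    ignored.on_key(ch)
print(repr(ignored.ghost()))  # ''
```

Ignoring a suggestion costs the user nothing. The suggestion disappears as soon as their own input diverges from it, which keeps the AI in a copilot role.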

The Problem: Hallucination fear.

The Solution: Every high-stakes prediction (e.g., Risk Score) now includes a standardized "Why this?" link. Clicking it opens a non-intrusive modal that cites the source data (e.g., "Based on 5 similar incidents resolved by Agent Smith"). This citation view is the cornerstone of our Explainability strategy.
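Below is a minimal data shape for the "Why this?" citation view, assuming each prediction carries the records it was derived from. `Prediction`, `Citation` and the record ID are hypothetical. The sketch builds the explanation from the stored source records and falls back to an honest message when none exist.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Citation:
    record_id: str  # hypothetical identifier of a source record
    summary: str

@dataclass
class Prediction:
    label: str
    value: float
    sources: List[Citation] = field(default_factory=list)

    def why_this(self) -> str:
        """Text for the "Why this?" view: cite the evidence, or say plainly there is none."""
        if not self.sources:
            return "No source records are available for this prediction."
        lines = [f"{self.label} is based on {len(self.sources)} similar record(s):"]
        lines += [f"- {c.record_id}: {c.summary}" for c in self.sources]
        return "\n".join(lines)

risk = Prediction("Risk Score", 0.82, [
    Citation("INC0000001", "Similar incident resolved by Agent Smith"),
])
print(risk.why_this())
```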

To ensure long-term compliance, we established an AI Governance Board. I created role-based documentation for "AI Stewards"—a new persona we identified in our research—who are responsible for auditing AI performance. The patterns I designed are now baked into the "Polaris" design system, ensuring that any future AI feature automatically inherits these ethical safeguards.